Abstract

Existing rain image editing methods focus on either removing rain from rain images or rendering rain on rain-free images. This paper proposes to control rain intensity continuously and bidirectionally, from a clear rain-free image to a downpour, taking a single rain image as input and without changing its scene-specific characteristics, e.g., the direction, appearance and distribution of the rain. Specifically, we introduce a Rain Intensity Controlling Network (RICNet) that contains three sub-networks: a background extraction network, a high-frequency rain-streak elimination network and a main controlling network. Together they allow rain images of different intensities to be controlled continuously by interpolation in the deep feature space. A histogram of oriented gradients (HOG) loss and an autocorrelation loss are proposed to enhance orientation consistency and to suppress repetitive rain streaks, respectively. Furthermore, a decremental learning strategy that trains the network sequentially from downpour to drizzle images is proposed to further improve performance and speed up convergence. Extensive experiments on both synthetic rain datasets and real rain images demonstrate the effectiveness of the proposed method.
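The two losses named above can be illustrated with a minimal sketch. The exact formulations used in RICNet are not given here, so the following plain-Python code rests on assumptions. `hog_loss` compares a single, global, magnitude-weighted histogram of gradient orientations (a simplification of the cell/block HOG descriptor) between a generated and a reference rain layer. `autocorrelation_loss` penalizes positive circular autocorrelation of a rain layer at shifts away from the origin, which is high when streak patterns repeat. All function names, the bin count and the `min_shift` parameter are hypothetical.

```python
import math

Image = list[list[float]]  # hypothetical: grayscale rain layer as rows of intensities


def gradients(img: Image) -> tuple[Image, Image]:
    """Central-difference gradients; border pixels are left at zero."""
    h, w = len(img), len(img[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            gy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    return gx, gy


def orientation_histogram(img: Image, bins: int = 9) -> list[float]:
    """Global, magnitude-weighted histogram of unsigned orientations in [0, pi)."""
    gx, gy = gradients(img)
    hist = [0.0] * bins
    for row_x, row_y in zip(gx, gy):
        for dx, dy in zip(row_x, row_y):
            mag = math.hypot(dx, dy)
            if mag == 0.0:
                continue
            angle = math.atan2(dy, dx) % math.pi  # fold opposite directions together
            hist[min(int(angle / math.pi * bins), bins - 1)] += mag
    total = sum(hist)
    return [v / total for v in hist] if total > 0.0 else hist


def hog_loss(pred: Image, ref: Image, bins: int = 9) -> float:
    """L1 distance between the normalized orientation histograms of two layers."""
    hp = orientation_histogram(pred, bins)
    hr = orientation_histogram(ref, bins)
    return sum(abs(a - b) for a, b in zip(hp, hr))


def autocorrelation_loss(layer: Image, min_shift: int = 2) -> float:
    """Mean positive normalized circular autocorrelation at shifts away from the origin."""
    h, w = len(layer), len(layer[0])
    mean = sum(sum(row) for row in layer) / (h * w)
    c = [[v - mean for v in row] for row in layer]
    var = sum(v * v for row in c for v in row)
    if var == 0.0:
        return 0.0  # a constant layer has no repeating structure
    total, count = 0.0, 0
    for sy in range(h):
        for sx in range(w):
            # Skip shifts within min_shift of the origin (circularly), where
            # any image correlates strongly with itself.
            if min(sy, h - sy) < min_shift and min(sx, w - sx) < min_shift:
                continue
            r = sum(c[y][x] * c[(y + sy) % h][(x + sx) % w]
                    for y in range(h) for x in range(w)) / var
            total += max(r, 0.0)
            count += 1
    return total / count if count else 0.0
```

For example, an intensity ramp along x compared with a ramp along y gives a HOG loss of 2, the maximum L1 distance between normalized histograms, while a periodic stripe pattern scores higher on the autocorrelation loss than a single bright dot.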

Method

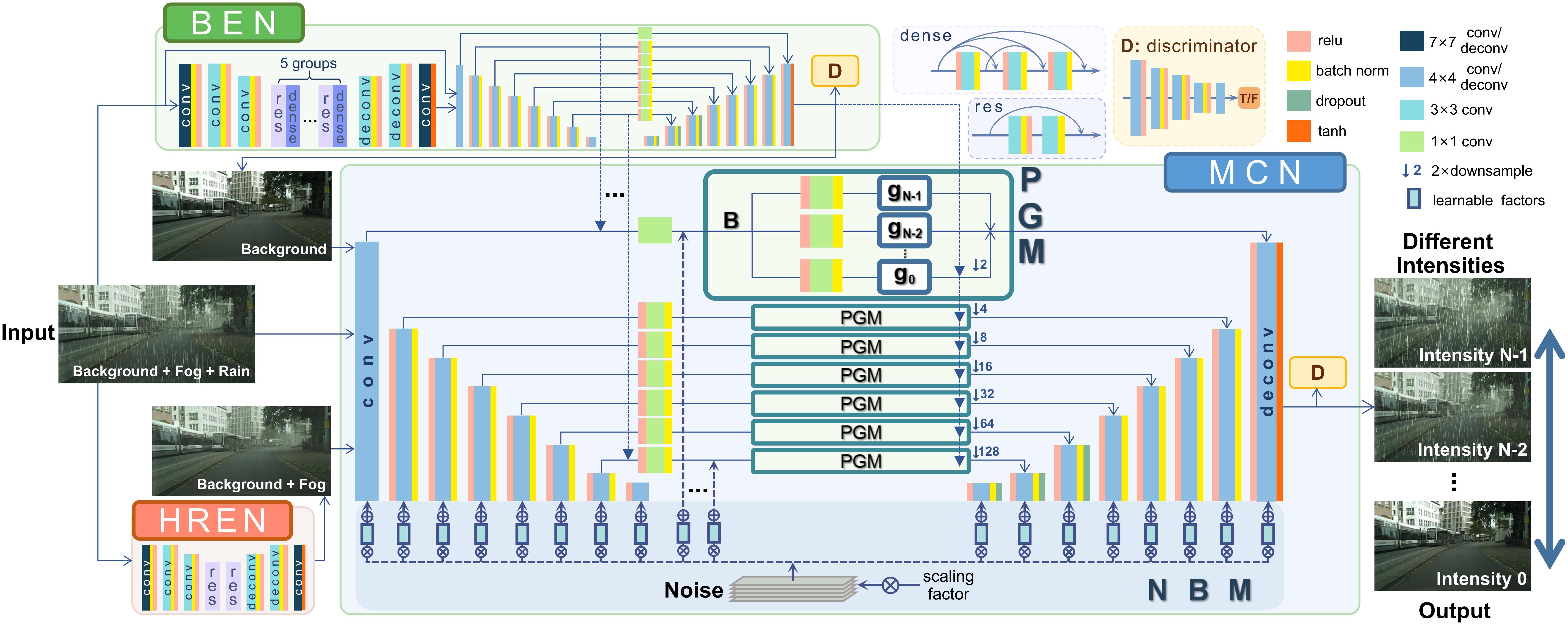

We propose a Rain Intensity Controlling Network (RICNet), which allows continuous and bidirectional control of rain, from removal to rendering at different intensities, while keeping the scene-specific characteristics of a given rain image. RICNet is composed of three sub-networks, i.e., the Background Extraction Network (BEN), the High-frequency Rain-streak Elimination Network (HREN) and the Main Controlling Network (MCN). Using the background and fog-related information extracted by BEN and HREN, MCN generates rain images of different intensities continuously and bidirectionally under the dual control of a Parallel Gating Module (PGM) and a Noise Boosting Module (NBM), based on a multi-level interpolation framework. To preserve the scene-specific rain characteristics and reduce the repetition of rain streaks generated by the rendering network, a histogram of oriented gradients (HOG) loss and an autocorrelation loss are proposed. In addition, a decremental training strategy that trains the network sequentially from downpour to drizzle images is used to further improve the performance of the proposed method.

Figure 1. The overall architecture of RICNet, composed of BEN, HREN and MCN.
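Continuous control by interpolation in the deep feature space can be sketched as follows. This is a hypothetical illustration, not the RICNet implementation: it assumes each network level yields a flattened feature map for a rain-free estimate and for the input rain image, and blends them per level as `clean + alpha * (rain - clean)`. Under this assumption `alpha = 0` reproduces the rain-free features, `alpha = 1` the input features, and `alpha > 1` extrapolates toward heavier rain. The Parallel Gating Module and Noise Boosting Module are not modeled, and the names `interpolate_level` and `multilevel_interpolate` are illustrative only.

```python
from typing import Sequence

FeatureMap = list[float]  # hypothetical: one flattened feature map per network level


def interpolate_level(clean: Sequence[float], rain: Sequence[float], alpha: float) -> FeatureMap:
    """Blend one level: alpha=0 -> rain-free features, alpha=1 -> input rain features."""
    if len(clean) != len(rain):
        raise ValueError("feature maps at one level must have the same size")
    # alpha > 1 extrapolates beyond the input, i.e. toward heavier rain.
    return [c + alpha * (r - c) for c, r in zip(clean, rain)]


def multilevel_interpolate(
    clean_levels: Sequence[Sequence[float]],
    rain_levels: Sequence[Sequence[float]],
    alphas: Sequence[float],
) -> list[FeatureMap]:
    """Apply interpolate_level independently at every level with its own coefficient."""
    if not len(clean_levels) == len(rain_levels) == len(alphas):
        raise ValueError("need one clean map, one rain map and one alpha per level")
    return [interpolate_level(c, r, a) for c, r, a in zip(clean_levels, rain_levels, alphas)]
```

Allowing a separate coefficient per level is one possible reading of "multi-level interpolation"; a single shared coefficient is the special case where all entries of `alphas` are equal.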

Experiments

Rain Removal

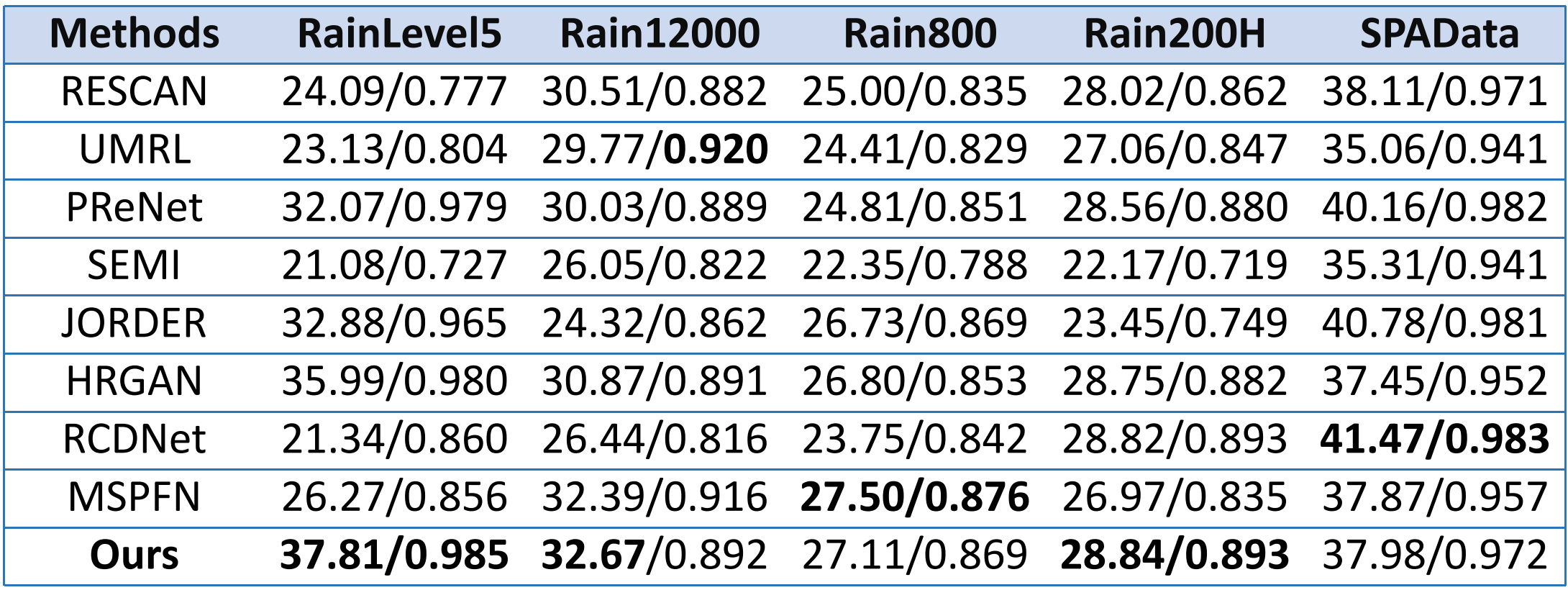

Table 1. Deraining comparisons on synthetic datasets (PSNR/SSIM).
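For reference, PSNR, the first metric in Table 1, is 10 · log10(MAX² / MSE) for a peak pixel value MAX. A minimal plain-Python sketch follows. The default peak value of 255 and averaging the squared error over all pixel values are assumptions, since the evaluation protocol (e.g., which color channel is used) is not specified here. SSIM is omitted.

```python
import math


def psnr(pred, ref, max_val=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped 2-D images."""
    if len(pred) != len(ref) or any(len(p) != len(r) for p, r in zip(pred, ref)):
        raise ValueError("images must have the same shape")
    # Mean squared error over every pixel value.
    diffs = [(p - r) ** 2 for prow, rrow in zip(pred, ref) for p, r in zip(prow, rrow)]
    mse = sum(diffs) / len(diffs)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)
```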

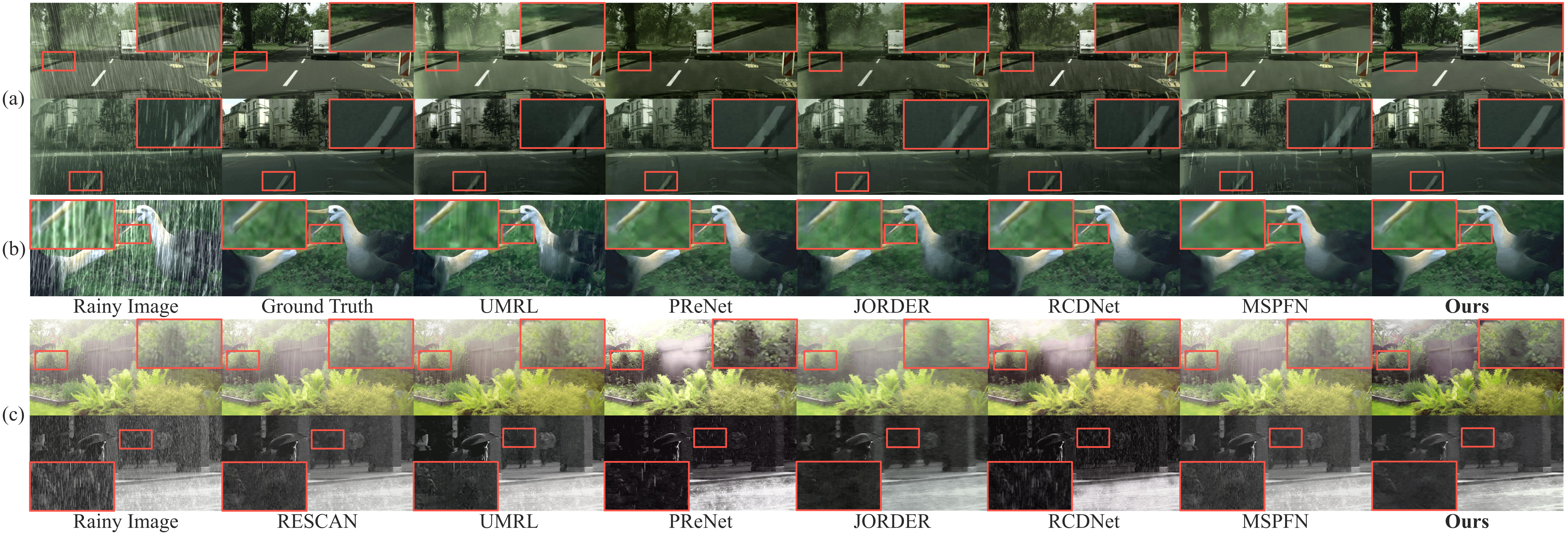

Figure 2. Deraining results on synthetic images from (a) RainLevel5 and (b) Rain200H, and on (c) real images.

Rain Control

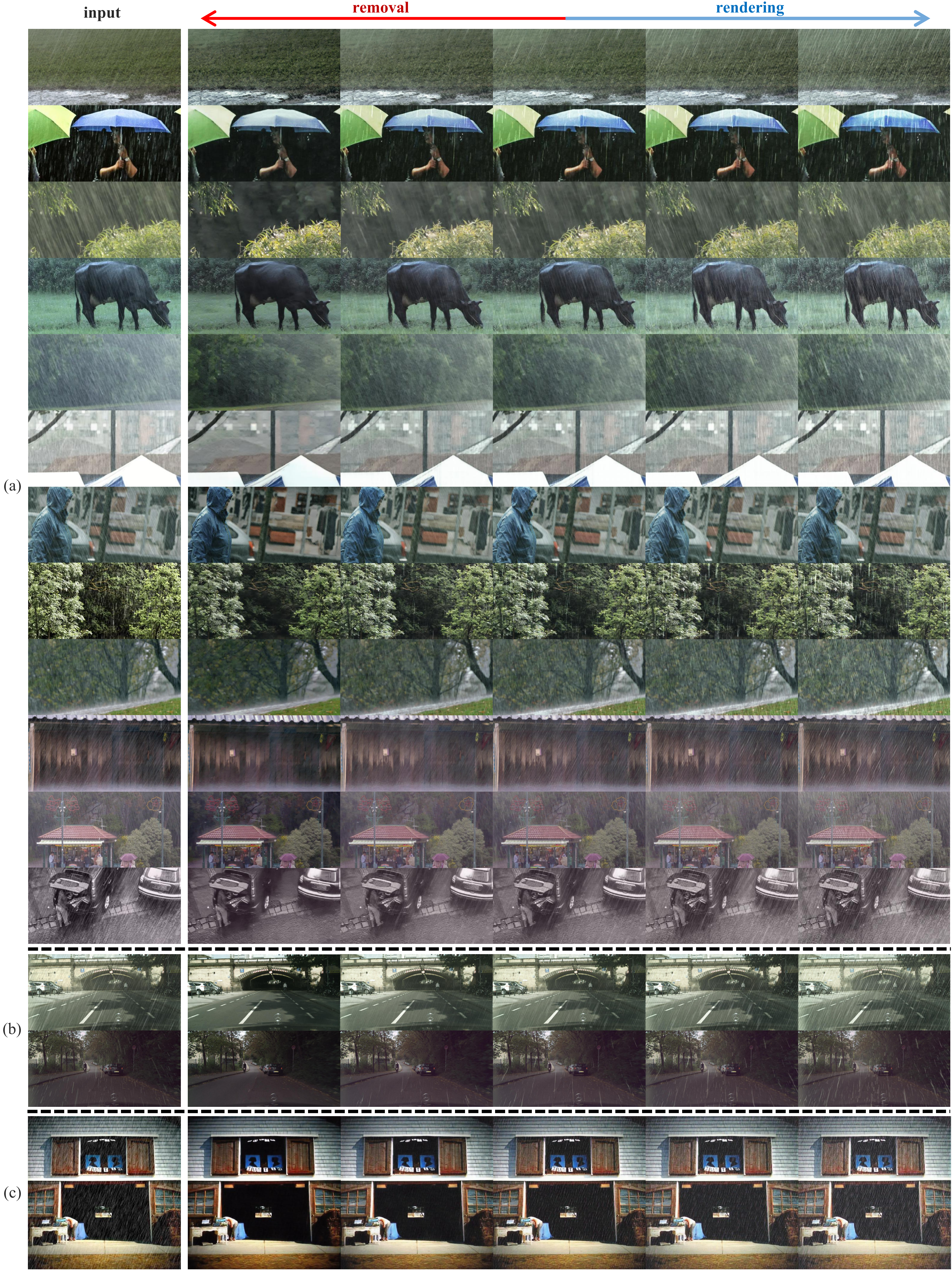

Figure 3. Rain control results on (a) real-world rain images and synthetic rain images in (b) RainLevel5 and (c) Rain12000.

BibTeX

@InProceedings{ni2021controlling,
  author = {Ni, Siqi and Cao, Xueyun and Yue, Tao and Hu, Xuemei},
  title = {Controlling the Rain: from Removal to Rendering},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2021}
}